Vibrissal-Responsive Neurons

Experimental data of a rat whisking to explore its environment

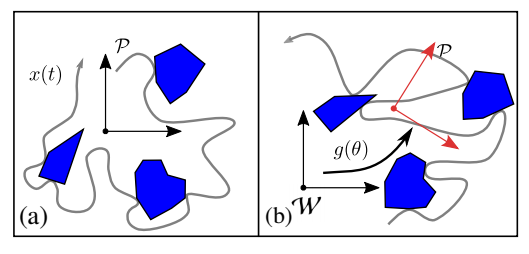

Simulated exploration strategies for an agent to localize itself in its environment

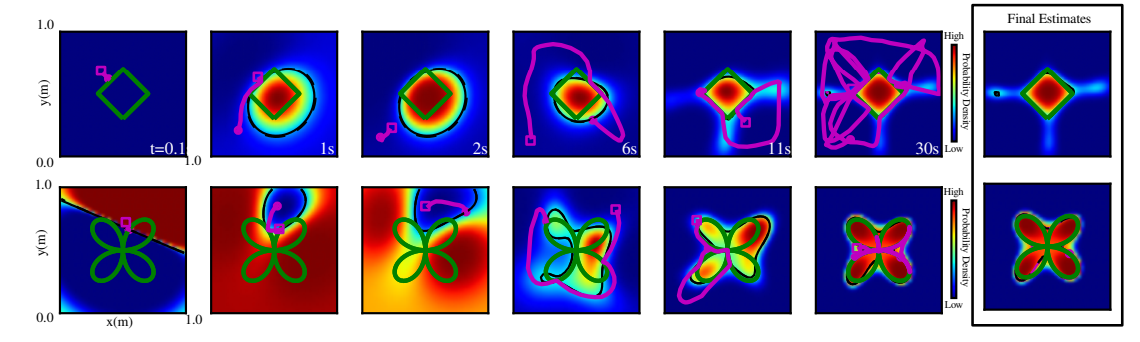

Ergodic exploration using binary sensing for shape estimation

We see because retinal ganglion cells respond to light. We hear because spiral ganglion cells respond to sound. We feel because primary somatosensory neurons respond to touch. But what is touch? Light and sound can be characterized by physical parameters such as amplitude, frequency, phase, and (for light) polarization. In contrast, the mechanics of touch, and the way primary sensory neurons encode the parameters of touch, remain largely unquantified. This gap spans the entire field of somatosensation, and it persists because the mechanics of touch are difficult to quantify. To close it, we will use the rat vibrissal (whisker) system as a model to relate the responses of primary sensory neurons directly to the quantified mechanics of touch. As rodents have become more widely used in genetic and optogenetic research, the rodent vibrissal array has likewise become an increasingly important model for studying touch and sensorimotor integration.

Collaborators

Mitra Hartmann, Northwestern University

Publications

Ergodic exploration using binary sensing for non-parametric shape estimation

I. Abraham, A. Prabhakar, M. Hartmann, and T. D. Murphey

IEEE Robotics and Automation Letters, vol. 2, no. 2, pp. 827–834, 2017.

Funding

This project is funded by the National Institutes of Health: Coding Properties of Vibrissal-Responsive Trigeminal Ganglion Neurons.