Haptic Languages

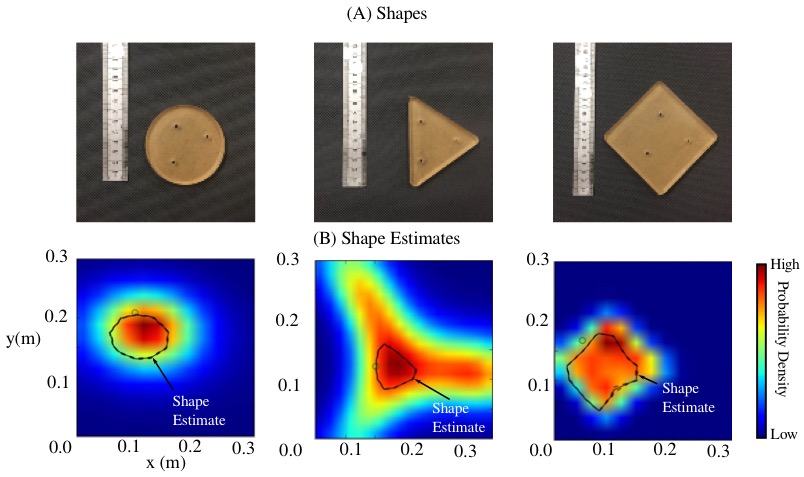

Shape estimation using binary sensing and ergodic exploration

We are interested in how robots can construct symbolic representations from mechanical contact. For instance, how can a robot feel an object with fingertip sensors and generate a symbolic representation of that object that is rich enough to identify the object later? The way the robot moves its fingertip over the object strongly influences the representation it obtains, so deriving control laws from what the robot needs to learn is a major part of this research. In the work shown in the image above, we focused on enabling a robot to estimate the shape of an object by interacting with it and measuring only its end-effector kinematics. Within a short time, the shape estimate stabilizes to a fixed representation that can later be used to search for the object. Goals of this work include the following.

• Develop algorithms that actively explore a region to estimate the shapes of multiple objects.

• Develop algorithms that use stored representations of individual objects to identify and localize an object.

• Identify symbolic representations beyond shape, such as texture, that can be used for touch-based discrimination.
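The two ideas in the first paragraph, that the exploration motion determines what the robot learns and that a shape can be recovered from contact alone, can be illustrated with a small Python sketch. It is not the method of the publications listed below. It assumes (1) the spectral ergodic metric of Mathew and Mezić on the unit square, with a cosine basis and weights (1 + |k|^2)^(-3/2), to score how evenly a trajectory covers the workspace, and (2) a k-nearest-neighbor vote over binary contact readings as a simple stand-in for a non-parametric shape estimator. The disk-shaped object, the two trajectories, and the names `ergodic_metric`, `estimate_inside`, `in_object`, and `uniform` are all hypothetical.

```python
import numpy as np


def fourier_basis(points, k):
    """Cosine basis function F_k on the unit square, normalized to unit L2 norm."""
    k1, k2 = k
    h = np.sqrt((1.0 if k1 == 0 else 0.5) * (1.0 if k2 == 0 else 0.5))
    return np.cos(k1 * np.pi * points[:, 0]) * np.cos(k2 * np.pi * points[:, 1]) / h


def ergodic_metric(trajectory, target_density, K=10, n_grid=64):
    """Spectral ergodic metric between a trajectory and a target density on [0, 1]^2.

    trajectory: (T, 2) positions sampled uniformly in time.
    target_density: maps an (N, 2) array of points to N non-negative values.
    """
    centers = (np.arange(n_grid) + 0.5) / n_grid
    gx, gy = np.meshgrid(centers, centers, indexing="ij")
    grid = np.column_stack([gx.ravel(), gy.ravel()])
    phi = target_density(grid)
    phi = phi / phi.mean()  # the unit square has area 1, so phi now integrates to 1
    s = (2 + 1) / 2  # Sobolev exponent (n + 1) / 2 with n = 2 dimensions
    total = 0.0
    for k1 in range(K):
        for k2 in range(K):
            k = (k1, k2)
            c_k = fourier_basis(trajectory, k).mean()  # time average along the path
            phi_k = (phi * fourier_basis(grid, k)).mean()  # midpoint-rule integral
            weight = (1.0 + k1 ** 2 + k2 ** 2) ** (-s)
            total += weight * (c_k - phi_k) ** 2
    return total


def estimate_inside(query, samples, contacts, k=5):
    """Majority vote of the k nearest binary contact readings for each query point."""
    dist = np.linalg.norm(query[:, None, :] - samples[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    return contacts[nearest].mean(axis=1) > 0.5


def in_object(points):
    """Hypothetical binary sensor: contact inside a disk of radius 0.25 at the center."""
    return np.linalg.norm(points - 0.5, axis=1) < 0.25


def uniform(points):
    """Uniform target density: every region is equally worth exploring."""
    return np.ones(len(points))


t = np.linspace(0.0, 2.0 * np.pi, 2000, endpoint=False)
# Hypothetical trajectories: a Lissajous sweep and a small loop near one corner.
sweep = np.column_stack([0.5 + 0.45 * np.sin(3 * t), 0.5 + 0.45 * np.cos(4 * t)])
corner = np.column_stack([0.1 + 0.05 * np.cos(t), 0.1 + 0.05 * np.sin(t)])

print(ergodic_metric(sweep, uniform) < ergodic_metric(corner, uniform))  # True
contacts = in_object(sweep)  # True where the end effector touched the object
print(estimate_inside(np.array([[0.5, 0.5], [0.05, 0.05]]), sweep, contacts))
# [ True False]
```

The sweep scores a lower (better) ergodic metric than the corner loop, and its contact labels are spread widely enough to classify the object's center as inside and the far corner as outside. In an active-exploration loop, one could replace the uniform target with a density derived from the current shape estimate; that extension is an assumption here, not taken from the papers.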

Collaborators

Mitra Hartmann, Northwestern University

Publications

Real-time area coverage and target localization using receding-horizon ergodic exploration

A. Mavrommati, E. Tzorakoleftherakis, I. Abraham, and T. D. Murphey

IEEE Transactions on Robotics, vol. 34, no. 1, pp. 62–80, 2018.

Data-driven measurement models for active localization in sparse environments

I. Abraham, A. Mavrommati, and T. D. Murphey

Proceedings of Robotics: Science and Systems, 2018.

Ergodic exploration using binary sensing for non-parametric shape estimation

I. Abraham, A. Prabhakar, M. Hartmann, and T. Murphey

IEEE Robotics and Automation Letters, vol. 2, no. 2, pp. 827–834, 2017.

Autonomous visual rendering using physical motion

A. Prabhakar, A. Mavrommati, J. Schultz, and T. D. Murphey

Workshop on the Algorithmic Foundations of Robotics (WAFR), 2016.

Funding

This project is funded by the National Science Foundation–National Robotics Initiative: Autonomous Synthesis of Haptic Languages.
